Psychopathic AI Is Now a Reality!

Meet Norman, the Psychopathic AI

Norman is an algorithm that was created alongside a group of other AIs, with one key difference: Norman is a psychopathic AI. Named after Norman Bates, the murderer from the film Psycho, it has an extremely bleak view of the world. Obviously, Norman cannot take physical action, but if it were ever let loose it could still prove a great threat.

The other algorithms in the group were fed data that was either happy or neutral. Norman, however, was shown some of the worst material its creators could find, mostly gruesome deaths and murders that had been posted on Reddit. The group was later shown seemingly meaningless inkblots, the kind psychologists typically use to assess a person's mental state. While most of the AIs saw pleasant things such as birds and sunny days, Norman saw grisly scenes of death and destruction in the exact same images.

As AI grows ever closer to being as lifelike as we are, signs of psychopathic capabilities are extremely worrying. AIs appear to function much like humans in that they are heavily influenced by what they take in and see. The glaring difference is that a human psychopath, although dangerous, is rarely able to cause mass destruction. In fact, it's said that there are many more psychopaths among us than you may think. A psychopathic AI, however, could be staggeringly smarter than any human, and could therefore act on its terrifying conclusions about the world far more effectively. Ultron vibes, anyone?

What could this potentially mean for the future?

It goes without saying that the people developing these algorithms will be extremely careful in the future and will most likely build in a fail-safe. We now know that artificial intelligence is highly impressionable, almost like a child, only an all-knowing one.

The problem does not stop at Norman and his desensitization to murder and death. In May last year, an AI was created for risk assessment in US courts. It was later found to have a substantial bias against people of color, rating them as much more dangerous because of the flawed information it had been given. Some people worry not only about the information these digital minds are given but also about the people who make them, with many pointing out that anyone could build their own misguided agenda into an AI. Closer oversight of how these algorithms are created, to ensure that developers do not deliberately give the AI incorrect ideas, could in theory prevent these problems.

Another large study found that an AI trained on information from Google News actually became sexist to a degree, answering many questions with remarks about women belonging at home, among other things.

The developers of future AI need to take great care with the information they provide; otherwise, at some point there will be a disaster that turns everyone against what is actually a wonderful innovation.

You might also like

Robots disobedience already a fact

Can robots actively disobey humans? Researchers from Tufts University in Massachusetts are training robots to making a decisions and being able to disobey humans. It could mark the era of

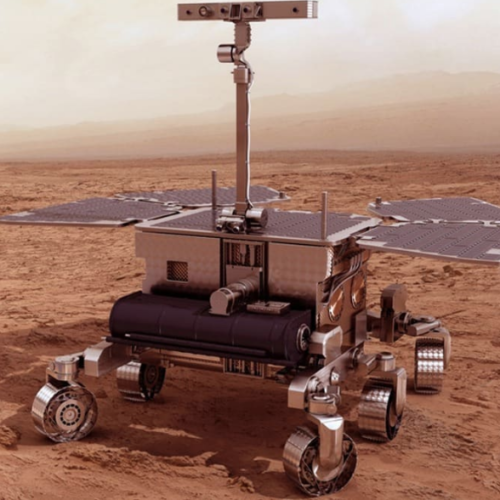

Robots Designed For Life on Mars!

A team of researchers over at Airbus in Stevenage have completed development on a robot built around the idea of searching for life or any sign of habitability on the

TITAN the ROBOT

As a first robot on our site, we’re introducing the most famous one – TITAN the ROBOT. Titan is from the UK. He is quite a big deal in the